Excerpt

What is a Markov Model?

A Markov Model is a set of mathematical procedures developed by the Russian mathematician Andrei Andreyevich Markov (1856-1922), who originally analyzed the alternation of vowels and consonants out of his passion for poetry.

The paper that E. Seneta [1] wrote to celebrate the 100th anniversary of the publication of Markov’s work in 1906 [2], [3] covers Markov’s life and his many academic works on probability, as well as the mathematical development of the Markov Chain, which is the simplest model and the basis for the other Markov Models.

In the late 1960s and early 1970s Leonard E. Baum and his colleagues studied, developed and extended the Markov techniques by creating new models such as the Hidden Markov Model (HMM) [4].

What are Markov Models used for?

Nowadays, Markov Models are used in several fields of science to explain random processes that depend on their current state. In other words, they characterize processes that are neither completely random and independent nor governed by a system of equations in which a specific input corresponds to an exact output.

A deterministic model attempts to explain the behavior of a process with precision and accuracy, while a probabilistic or stochastic model attempts to determine by probability the behavior of a random, independent process. The Markov Model, in turn, attempts to explain a random process that depends on the current event but not on previous events, so it is a special case of a probabilistic or stochastic model.

When we have a dynamic system whose states are fully observable, we use the Markov Chain Model; if the system has states that are only partially observable, we use the Hidden Markov Model.

For example, a dynamic system can be a price stream sampled at a fixed frequency (minutes, hours, days or weeks) or at an undetermined frequency such as ticks. Its observable states can be whether the price goes up, goes down or stays unchanged, although a state can also be defined in other ways, for example as a certain price figure. We will go into detail when we see how the Markov Chain works.
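As a minimal sketch of the up/down/unchanged labeling described above, the snippet below turns a list of prices into a sequence of states. The function name `label_states`, the `tol` parameter and the sample prices are assumptions made for illustration; they are not taken from the original article's code.

```python
def label_states(prices, tol=0.0):
    """Label each consecutive price change as 'up', 'down' or 'unchanged'.

    `tol` is a hypothetical threshold: changes whose absolute value
    does not exceed it are treated as 'unchanged'.
    """
    states = []
    for previous, current in zip(prices, prices[1:]):
        change = current - previous
        if change > tol:
            states.append("up")
        elif change < -tol:
            states.append("down")
        else:
            states.append("unchanged")
    return states


# Made-up prices, for illustration only
sample_prices = [100.0, 101.5, 101.5, 100.8, 102.0]
sample_states = label_states(sample_prices)
print(sample_states)
```

A series of n prices yields n-1 states, because each state describes the move between two consecutive observations.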

The Markov Model uses a system of vectors and matrices whose output gives us the expected probability of each possible next state given the current state; in other words, it describes how the possible alternative outcomes relate to the current state.
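To make the vector-and-matrix idea concrete, here is a small sketch using NumPy. The transition probabilities are invented for illustration and the variable names are assumptions; each row of the matrix holds the probabilities of the next state given one current state.

```python
import numpy as np

states = ["up", "down", "unchanged"]

# Hypothetical transition matrix: row i holds P(next state | current state i).
# The numbers are made up for illustration only.
P = np.array([
    [0.5, 0.4, 0.1],  # current state: up
    [0.6, 0.3, 0.1],  # current state: down
    [0.4, 0.4, 0.2],  # current state: unchanged
])

# Every row must be a probability distribution.
assert np.allclose(P.sum(axis=1), 1.0)

# State vector: we are certain the current state is "down".
current = np.array([0.0, 1.0, 0.0])

# Multiplying the state vector by the matrix gives the
# probabilities of each state at the next step.
next_probs = current @ P
for name, prob in zip(states, next_probs):
    print(f"P(next = {name} | current = down) = {prob:.2f}")
```

With a one-hot state vector, the product simply selects the row of the matrix that corresponds to the current state.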

How does a Markov Model work?

Let’s start by naively describing how the simplest model, the Markov Chain, works. In this post, we focus on some implementation ideas in Python rather than dwelling on the formulation and mathematical development.

Interested readers are encouraged to review the tutorial on Hidden Markov Models and Selected Applications in Speech Recognition by Lawrence R. Rabiner [6] to get a solid base on the mathematical foundations of the Markov Chain and the HMM.

With a Markov Chain, we intend to model a dynamic system with a finite set of observable states that evolves, in its simplest form, in discrete time. We can describe it as the transitions of a set of finite states over time.

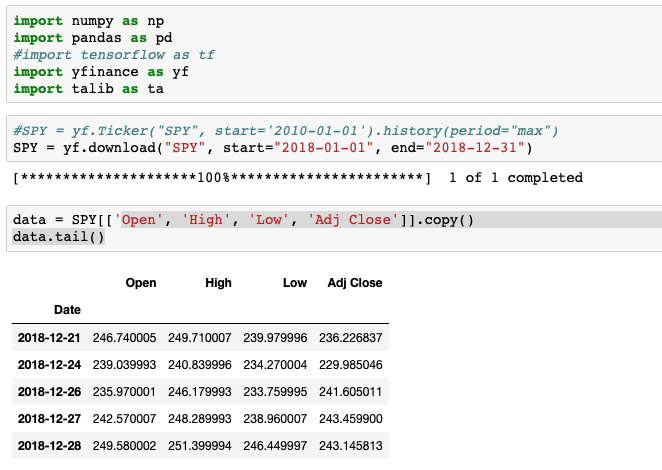
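The idea of a finite set of states transitioning in discrete time can be sketched by sampling a path from a chain. This is only an illustration: the function `simulate_chain`, the transition probabilities and the seed are assumptions, not part of the original article.

```python
import numpy as np


def simulate_chain(P, states, start, n_steps, seed=0):
    """Sample a path of n_steps transitions from a Markov Chain.

    P[i, j] is the probability of moving from states[i] to states[j].
    The next state is drawn using only the current state's row.
    """
    rng = np.random.default_rng(seed)
    current = states.index(start)
    path = [start]
    for _ in range(n_steps):
        current = rng.choice(len(states), p=P[current])
        path.append(states[current])
    return path


states = ["up", "down", "unchanged"]

# Hypothetical transition matrix, made up for illustration only
P = np.array([
    [0.5, 0.4, 0.1],
    [0.6, 0.3, 0.1],
    [0.4, 0.4, 0.2],
])

path = simulate_chain(P, states, start="up", n_steps=10)
print(path)
```

Note that each draw depends only on the row of the current state, which is what makes the process a Markov Chain.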

Let’s take a simple example to build a Markov Chain. Let’s say we have a series of SPY prices and we want to model the behavior to make predictions about the future price.

To do this, we need the frequency distribution of each possible state at time t. From this, we generate a transition matrix (or probability matrix). Multiplying it iteratively by the original transition matrix lets us extend the behavior of the model in time and obtain the probability of the states at time t+i.

The Markov Chain reaches its limit when the transition matrix reaches the equilibrium matrix, that is, when multiplying the matrix at time t+k by the original transition matrix no longer changes the probabilities of the possible states.

Let’s get the 2018 prices for the SPY ETF, which replicates the S&P 500 index.
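One way to sketch this step is to count how often each state follows another in an observed sequence, normalize each row into probabilities, and then raise the matrix to a power to look several steps ahead. The function `transition_matrix` and the short state sequence are assumptions for illustration; the original article's implementation may differ.

```python
import numpy as np


def transition_matrix(sequence, states):
    """Estimate a transition matrix from observed state-to-state counts."""
    index = {state: i for i, state in enumerate(states)}
    counts = np.zeros((len(states), len(states)))
    for current, following in zip(sequence, sequence[1:]):
        counts[index[current], index[following]] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows for states that were never observed as a starting point stay at zero.
    return np.divide(counts, row_sums,
                     out=np.zeros_like(counts), where=row_sums > 0)


# Made-up sequence of states, for illustration only
states = ["up", "down"]
sequence = ["up", "up", "down", "up", "down", "down", "up"]

P = transition_matrix(sequence, states)
print(P)

# Multiplying the matrix by itself extends the horizon:
# P3[i, j] is the probability of being in state j three steps after state i.
P3 = np.linalg.matrix_power(P, 3)
print(P3)
```

In this toy sequence, "up" is followed by "up" once and by "down" twice, so the first row of the estimated matrix is one third and two thirds.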
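The equilibrium condition can be sketched as a loop that keeps multiplying by the original transition matrix until the result stops changing. The function `equilibrium`, the tolerance and the example matrix are assumptions for illustration. Not every chain converges this way (a periodic chain, for instance, does not), so the sketch raises an error if no limit is found.

```python
import numpy as np


def equilibrium(P, tol=1e-10, max_iter=10_000):
    """Multiply by P repeatedly until the matrix no longer changes.

    Returns the limiting matrix and the number of multiplications used.
    """
    current = P.copy()
    for k in range(1, max_iter + 1):
        following = current @ P
        if np.max(np.abs(following - current)) < tol:
            return following, k
        current = following
    raise RuntimeError("No equilibrium found within max_iter multiplications")


# Hypothetical two-state transition matrix, made up for illustration only
P = np.array([
    [0.9, 0.1],
    [0.5, 0.5],
])

limit, steps = equilibrium(P)
print(limit)
print(steps)
```

When the chain converges, every row of the limiting matrix is the same: the long-run probabilities of the states no longer depend on where the chain started.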

Visit QuantInsti to download the ready-to-use code and read the rest of the article:

https://blog.quantinsti.com/markov-model/

References:

[1] Seneta, Eugene. (2006). Markov and the creation of Markov Chains.

[2] A.A. Markov, Extension of the law of large numbers to dependent quantities (in Russian), Izvestiia Fiz.-Matem. Obsch. Kazan Univ., (2nd Ser.), 15 (1906), pp. 135–156.

[3] A.A. Markov, The extension of the law of large numbers onto quantities depending on each other. Translation into English of [2].

[4] Baum, Leonard E., and Ted Petrie. 1966. Statistical inference for probabilistic functions of finite state Markov chains. The Annals of Mathematical Statistics 37: 1554–63.

This material is from QuantInsti and is being posted with its permission.
