What exactly is forward propagation in neural networks? Well, if we break down the words, “forward” implies moving ahead, and “propagation” refers to the spreading of something. In neural networks, forward propagation means moving in only one direction: from input to output. Think of it as moving forward in time, where we have no option but to keep moving ahead!

In this blog, we will delve into the intricacies of forward propagation, its calculation process, and its significance in different types of neural networks, including feedforward networks, CNNs, and ANNs.

We will also explore the components involved, such as activation functions, weights, and biases, and discuss the applications of forward propagation across various domains, including trading. Additionally, we will walk through examples of forward propagation implemented in Python, along with potential future developments and FAQs.

This blog covers:

- What are neural networks?

- What is forward propagation?

- Forward propagation algorithm

- Forward vs backward propagation in neural network

- Forward propagation in different types of neural networks

- Components of forward propagation

- Applications of forward propagation

- Process of forward propagation in trading

- Forward propagation in neural networks for trading using Python

- Challenges with forward propagation in trading

- FAQs while using forward propagation in neural networks for trading

What are neural networks?

For centuries, we’ve been fascinated by how the human mind works. Philosophers have long grappled with understanding human thought processes. However, it’s only in recent years that we’ve started making real progress in deciphering how our brains operate. This is where conventional computers diverge from humans.

You see, while we can create algorithms to solve problems, we have to consider all sorts of possibilities in advance. Humans, on the other hand, can start with limited information and still learn and solve problems quickly and accurately. Hence, we began researching and developing artificial brains, now known as neural networks.

Definition of a neural network

A neural network is a computational model inspired by the human brain’s neural structure, consisting of interconnected layers of artificial neurons. These networks process input data, adjust through learning, and produce outputs, making them effective for tasks like pattern recognition, classification, and predictive modelling.

What does a neural network look like?

A neural network could be simply described as follows:

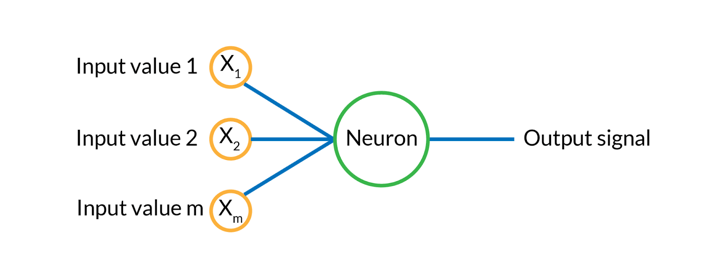

- The basic building block of a neural network is the perceptron, inspired by the neurons in our brains.

- A neuron receives several inputs, marked with yellow circles, processes them, and then emits an output signal.

- The inputs resemble the dendrites of a neuron, while the output signal is comparable to the axon. Each input signal is assigned a weight (wi), which is multiplied by the input value. The weighted sum of all the inputs is then computed.

- Following this, an activation function is applied to the weighted sum, resulting in the output signal.
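The steps in the list above can be sketched in a few lines of Python. This is only a minimal illustration and not code from the original post: the function names `sigmoid` and `neuron_output`, the choice of a sigmoid activation, and the sample inputs and weights are all assumptions made for this example.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, activation=sigmoid):
    """Multiply each input by its weight, sum the products, then apply the activation."""
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    return activation(weighted_sum)

# Hypothetical values, chosen only for illustration
inputs = [1.0, 2.0, 3.0]
weights = [0.5, -0.25, 0.0]  # weighted sum = 0.5 - 0.5 + 0.0 = 0.0

output = neuron_output(inputs, weights)
print(output)  # sigmoid(0.0) = 0.5
```

Swapping in a different activation only requires passing another function as the `activation` argument.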

One popular application of neural networks is image recognition software, capable of identifying faces and tagging the same person in different lighting conditions.

Now, let’s delve into the details of forward propagation beginning with its definition.

What is forward propagation?

Forward propagation is a fundamental process in neural networks that involves moving input data through the network to produce an output. It’s essentially the process of feeding input data into the network and computing an output value through the layers of the network.

During forward propagation, each neuron in the network receives input from the previous layer, performs a computation using weights and biases, applies an activation function, and passes the result to the next layer. This process continues until the output is generated. In simple terms, forward propagation is like passing a message through a series of people, with each person adding some information before passing it to the next person until it reaches its destination.

Next, we will see the forward propagation algorithm in detail.

Forward propagation algorithm

Here’s a simplified explanation of the forward propagation algorithm:

- Input Layer: The process begins with the input layer, where the input data is fed into the network.

- Hidden Layers: The input data is passed through one or more hidden layers. Each neuron in these hidden layers receives input from the previous layer, computes a weighted sum of these inputs, adds a bias term, and applies an activation function.

- Output Layer: Finally, the processed data moves to the output layer, where the network produces its output.

- Error Calculation: Once the output is generated, it is compared to the actual output (in the case of supervised learning). The error, also known as the loss, is calculated using a predefined loss function, such as mean squared error or cross-entropy loss.

Once the error is known, it is used to adjust the weights and biases of the network during the backpropagation phase, which is crucial for training the neural network.
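To make the four steps concrete, here is a minimal sketch of one forward pass followed by the error calculation. Everything specific in it is an assumption for illustration: the tiny network shape (2 inputs, 2 hidden neurons, 1 output), the hand-picked weights and biases, the ReLU and identity activations, and the helper names `layer_forward`, `forward` and `mean_squared_error`.

```python
def relu(z):
    """Pass positive values through unchanged, clip negatives to zero."""
    return max(0.0, z)

def identity(z):
    """No activation, often used for a regression output."""
    return z

def layer_forward(inputs, weights, biases, activation):
    """One layer: each neuron computes activation(weighted sum + bias)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def forward(inputs, layers):
    """Pass the data through every layer in order, from input to output."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = layer_forward(activations, weights, biases, activation)
    return activations

def mean_squared_error(predicted, actual):
    """Average of the squared differences between prediction and target."""
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(actual)

# Hypothetical network: 2 inputs -> 2 hidden neurons (ReLU) -> 1 output (identity)
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 1.0], relu),  # hidden layer
    ([[2.0, 1.0]], [-1.0], identity),               # output layer
]

x = [3.0, 1.0]    # input layer
target = [5.0]    # actual output (supervised learning)

prediction = forward(x, layers)
loss = mean_squared_error(prediction, target)
print(prediction, loss)  # [6.0] 1.0
```

The hidden layer produces 2.0 and 3.0, the output neuron turns these into 6.0, and the squared difference from the target of 5.0 gives a loss of 1.0. Backpropagation would then use this loss to adjust the weights and biases.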

I will explain forward propagation with the help of a simple equation of a line next.

We all know that a line can be represented with the help of the equation:

y = mx + b

Where,

- y is the y coordinate of the point

- m is the slope

- x is the x coordinate

- b is the y-intercept, i.e. the value of y at which the line crosses the y-axis

But why are we jotting the line equation here?

This will help us later on when we understand the components of a neural network in detail.

Remember how we said neural networks are supposed to mimic the thinking process of humans?

Well, let us just assume that we do not know the equation of a line, but we do have graph paper and have drawn a line on it at random.

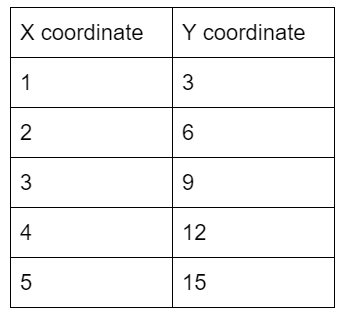

For the sake of this example, suppose you drew a line through the origin, and when you read off the x and y coordinates, they looked like this:

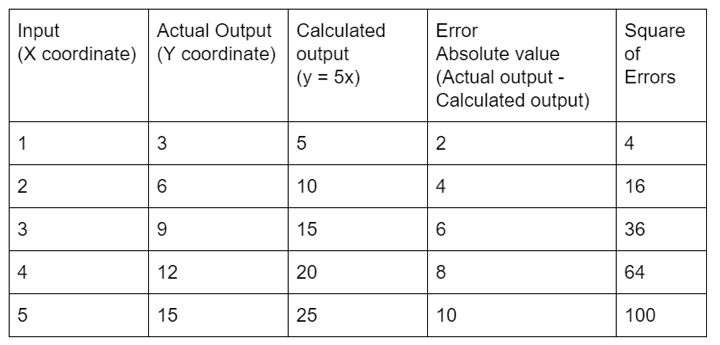

This looks familiar. If I asked you to find the relation between x and y, you would directly say it is y = 3x. But let us go through the process of how forward propagation works. We will assume here that x is the input and y is the output.

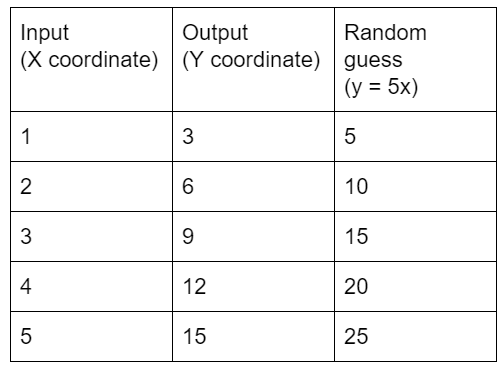

The first step here is the initialisation of the parameters. We will guess that y must be some multiple of x. So we will assume that y = 5x and see what results we get. Let us add this to the table and see how far we are from the answer.

Note that taking the number 5 is just a random guess and nothing else. We could have taken any other number here. I should point out that here we can term 5 as the weight of the model.
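This first guess can be written as a very small forward pass in Python. The sketch below assumes the sample points are x = 1 to 5 on the line y = 3x; those x values and the function name `forward` are assumptions made for illustration.

```python
# Assumed sample points on the line y = 3x (x = 1..5 is an assumption)
xs = [1, 2, 3, 4, 5]
actual = [3 * x for x in xs]

weight = 5  # the initial random guess, i.e. the model y = 5x

def forward(x, w):
    """Forward pass of a one-weight model with no bias and no activation."""
    return w * x

predicted = [forward(x, weight) for x in xs]

for x, y, y_hat in zip(xs, actual, predicted):
    print(f"x={x}  actual y={y}  predicted y={y_hat}")
```

Changing `weight` to any other number and rerunning the loop is all it takes to try a different guess.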

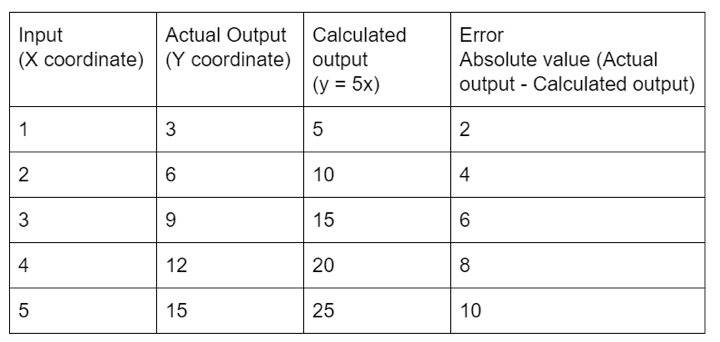

All right, this was our first attempt. Now we will see how close (or far) we are from the actual output. One way to do that is to take the difference between the actual output and the output we calculated. We will call this the error. Here, we aren’t concerned with whether the sign is positive or negative, and hence we take the absolute value of the difference.

Thus, we will update the table now with the error.

If we take the sum of this error, we get the value 30. But why did we total the error? Since we are going to try multiple guesses to come to the closest answer, we need to know how close or how far we were from the previous answers. This helps us refine our guesses and calculate the correct answer.
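The total can be checked with a short Python sketch. It assumes the sample points are x = 1 to 5 (an assumption for illustration), with the true relation y = 3x and the guess y = 5x.

```python
xs = [1, 2, 3, 4, 5]             # assumed inputs
actual = [3 * x for x in xs]     # true relation y = 3x
predicted = [5 * x for x in xs]  # our guess y = 5x

# Absolute error per point: the sign of the difference is ignored
abs_errors = [abs(a - p) for a, p in zip(actual, predicted)]
total_abs_error = sum(abs_errors)

print(abs_errors)       # [2, 4, 6, 8, 10]
print(total_abs_error)  # 30
```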

Wait. If we just add up all the error values, we are giving equal weight to every error. Shouldn’t we penalise the values that are way off the mark? For example, an error of 10 is much worse than an error of 2. This is where we introduce the well-known “Sum of Squared Errors”, or SSE for short. In SSE, we square each error value and then add them up. Large errors are thereby exaggerated, which helps us decide how to proceed further.

Let’s put these values in the table below.

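Now the SSE for the weight 5 (recall that we assumed y = 5x) is 220, that is, 4 + 16 + 36 + 64 + 100 for the absolute errors 2, 4, 6, 8 and 10 that added up to 30 above. This value is the loss, and the rule used to compute it (here, SSE) is called the loss function. The loss function tells us how well the neural network is performing, and it also helps us when we incorporate backpropagation in the neural network.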
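A short Python sketch shows how the SSE loss lets us compare guesses. It assumes the sample points are x = 1 to 5 on the line y = 3x; the exact loss values depend on that assumption, and the function name `sse` is made up for this example.

```python
def sse(weight, xs, actual):
    """Sum of squared errors for the one-weight model y = weight * x."""
    return sum((y - weight * x) ** 2 for x, y in zip(xs, actual))

xs = [1, 2, 3, 4, 5]          # assumed inputs
actual = [3 * x for x in xs]  # true relation y = 3x

# Try a few candidate weights and compare their losses
losses = {w: sse(w, xs, actual) for w in (5, 4, 3)}
for w, loss in losses.items():
    print(f"weight={w}  SSE={loss}")
# weight=5  SSE=220
# weight=4  SSE=55
# weight=3  SSE=0
```

Under these assumptions the loss shrinks as the guess moves from 5 towards 3 and reaches zero at the true weight, which is the signal a training procedure uses to refine its guesses.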

All right, so far we understood the principle of how the neural network tries to learn. We have also seen the basic principle of the neuron. Next, we will see the forward vs backward propagation in the neural network.

Author: Chainika Thakar (Originally written by Varun Divakar and Rekhit Pachanekar)

Originally posted on QuantInsti.

Stay tuned for Part II to read about forward vs backward propagation in neural network.

Disclosure: Interactive Brokers

Information posted on IBKR Campus that is provided by third-parties does NOT constitute a recommendation that you should contract for the services of that third party. Third-party participants who contribute to IBKR Campus are independent of Interactive Brokers and Interactive Brokers does not make any representations or warranties concerning the services offered, their past or future performance, or the accuracy of the information provided by the third party. Past performance is no guarantee of future results.

This material is from QuantInsti and is being posted with its permission. The views expressed in this material are solely those of the author and/or QuantInsti and Interactive Brokers is not endorsing or recommending any investment or trading discussed in the material. This material is not and should not be construed as an offer to buy or sell any security. It should not be construed as research or investment advice or a recommendation to buy, sell or hold any security or commodity. This material does not and is not intended to take into account the particular financial conditions, investment objectives or requirements of individual customers. Before acting on this material, you should consider whether it is suitable for your particular circumstances and, as necessary, seek professional advice.
