Convolutional networks have gained immense popularity recently. You may be wondering: what is a convolutional network?

Convolutional neural networks (CNNs) are a deep learning technique mainly used for image recognition and computer vision tasks. Since data visualisation is an integral part of algorithmic trading, CNNs are widely used there as well.

The key characteristic of a CNN is its ability to automatically learn and extract features from raw input data through its convolutional layers. These layers apply a set of learnable filters (also called kernels) to the input data.

These filters enable the network to detect different patterns and features at multiple spatial scales. The filters slide over the input data, performing element-wise multiplications and summations to generate feature maps.
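To make the sliding-window description concrete, here is a minimal pure-Python sketch of one filter passing over a small single-channel input with stride 1 and no padding. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The toy input and the hand-picked edge-detecting kernel are illustrative assumptions; in a real CNN the kernel values are learned during training.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding).

    At each position, multiply the overlapping values element-wise and sum
    them; the sums form the output feature map.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Toy 4x4 "image" with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked vertical-edge kernel (a trained CNN would learn its own values).
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))
```

Every row of the resulting 3×3 feature map is `[0.0, 2.0, 0.0]`: the filter responds only where the edge sits, which is the "highlight the presence of a feature at a location" behaviour described above.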

Let us now go through a brief introduction to convolutional neural networks before starting with the full-fledged blog on CNNs in the trading domain.

Layers of convolutional neural networks

We will begin with learning about the layers of convolutional neural networks.

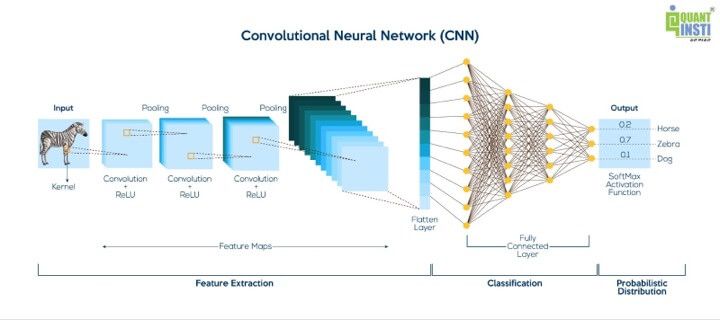

Besides convolutional layers, CNNs include other types of layers, such as pooling layers and fully connected layers.

Pooling layers reduce the spatial dimensions of the feature maps, which reduces the number of parameters and computations in subsequent layers. They also make the network more robust to small spatial translations or distortions in the input data.
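A minimal sketch of max pooling in plain Python, assuming the common 2×2 window with stride 2. Moving the large value to a neighbouring cell inside the same window leaves the pooled output unchanged, which illustrates the robustness to small translations. Note that max pooling itself has no learnable parameters; the parameter savings show up in the layers that follow, because they receive smaller inputs.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep only the largest value in each size x size window."""
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


fm = [
    [1, 3, 2, 0],
    [4, 2, 1, 5],
    [0, 1, 3, 2],
    [2, 6, 1, 1],
]
print(max_pool2d(fm))  # [[4, 5], [6, 3]]: a 4x4 map becomes 2x2

# Shift the 6 one column to the left; it stays in the same 2x2 window.
shifted = [row[:] for row in fm]
shifted[3][0], shifted[3][1] = fm[3][1], fm[3][0]
print(max_pool2d(shifted))  # [[4, 5], [6, 3]]: unchanged
```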

The fully connected layers are responsible for the final classification or regression tasks, where the learned features are combined and mapped to the output labels.

Going forward, let us briefly find out how a convolutional neural network works.

How do convolutional neural networks work?

As the figure above shows, a CNN takes an image as input and passes it through convolutional layers, where features are extracted and learned, and then through fully connected layers. Depending on the specific objective, the fully connected layers perform classification or regression and pass their result to the output layer.

In overview, the process works as follows.

Feature extraction

- Input layer: The first step is to define the input layer, which specifies the shape and size of the input images.

- Convolutional layer + ReLU (feature maps): The convolutional layer performs convolution operations by applying filters or kernels to the input images. These filters extract local features from the images, capturing patterns such as edges, textures, and shapes. This process creates feature maps that highlight the presence of specific features in different spatial locations. After the convolution operation, an activation function, ReLU (which replaces every negative value with zero), is applied element-wise to introduce non-linearity into the network.

- Pooling layer: Pooling layers are used to downsample the feature maps generated by the convolutional layers. This layer reduces their spatial dimensions while retaining the most important information.
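The feature-extraction steps change the spatial size of the data in a predictable way. For a layer with kernel size k, stride s and padding p applied to an input n pixels wide, the output width is ⌊(n + 2p − k)/s⌋ + 1. The sketch below traces a hypothetical 28×28 input through two convolution + pooling stages; the layer sizes and the 16 filters in the last layer are illustrative assumptions, not a recommended architecture. ReLU is left out of the trace because an element-wise activation does not change the shape.

```python
def output_size(n, kernel, stride=1, padding=0):
    """Spatial size (along one dimension) after a convolution or pooling layer."""
    return (n + 2 * padding - kernel) // stride + 1


# Hypothetical architecture; all sizes are illustrative.
size = 28                                     # 28x28 input
size = output_size(size, kernel=3)            # 3x3 conv, no padding -> 26
size = output_size(size, kernel=2, stride=2)  # 2x2 max pool         -> 13
size = output_size(size, kernel=3)            # 3x3 conv             -> 11
size = output_size(size, kernel=2, stride=2)  # 2x2 max pool         -> 5
num_filters = 16                              # hypothetical channels in the last conv layer

flattened_length = size * size * num_filters  # what the flatten layer will produce
print(size, flattened_length)                 # 5 400
```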

Classification

- Flatten layer: At this stage, the feature maps from the previous layers are flattened into a 1-dimensional vector. This step converts the spatial representation of the features into a format that can be processed by fully connected layers.

- Fully connected layer: Fully connected layers are traditional neural network layers in which each neuron is connected to every neuron in the previous layer. These layers learn high-level representations by combining the features extracted by the previous layers. Fully connected layers often have a large number of parameters and are typically followed by activation functions.
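A minimal sketch of the flatten step followed by one fully connected layer with ReLU, in plain Python. The feature maps, weights and biases are hypothetical placeholders for values a trained network would produce or learn. The helper `dense_param_count` shows why fully connected layers tend to be parameter-heavy: a layer with n_in inputs and n_out neurons has n_in × n_out weights plus n_out biases.

```python
def flatten(feature_maps):
    """Turn a list of 2D feature maps (channels x height x width) into one 1D vector."""
    return [value for fmap in feature_maps for row in fmap for value in row]


def relu(z):
    """Replace negative values with zero."""
    return max(0.0, z)


def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: every neuron computes a weighted sum of ALL inputs plus a bias."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs


def dense_param_count(n_in, n_out):
    """Number of weights plus biases in a fully connected layer."""
    return n_in * n_out + n_out


maps = [
    [[1, 2], [3, 4]],  # channel 0
    [[0, 1], [1, 0]],  # channel 1
]
x = flatten(maps)      # 2 channels x 2 x 2 -> 8 values
# Hypothetical weights for 2 neurons with 8 inputs each.
W = [[0.1] * 8, [-0.1] * 8]
b = [0.0, 0.5]
print(x)
print(dense(x, W, b, activation=relu))
print(dense_param_count(400, 128))  # 51328 parameters for a modest 400 -> 128 layer
```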

Probability distribution

- Output layer: The output layer is the final layer of the network and produces the desired output. The number of neurons in this layer depends on the specific task. For example, in image classification, the output layer may have one neuron per class. A softmax activation function is often used to convert the outputs into probability scores. In trading, these scores serve as the model's predictions when the task is framed as classification, for example, predicting whether the price of a financial instrument will rise or fall.
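A minimal sketch of the softmax step, assuming a hypothetical two-class output layer whose classes are "price goes down" and "price goes up". The raw scores are made up for illustration. Subtracting the largest score before exponentiating is a standard numerical-stability trick and does not change the result.

```python
import math


def softmax(logits):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max so math.exp cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical raw scores for the classes ["down", "up"].
probs = softmax([0.2, 1.1])
print(probs)
```

Here the model would assign roughly 0.29 to "down" and 0.71 to "up"; the class with the higher probability is the predicted one.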

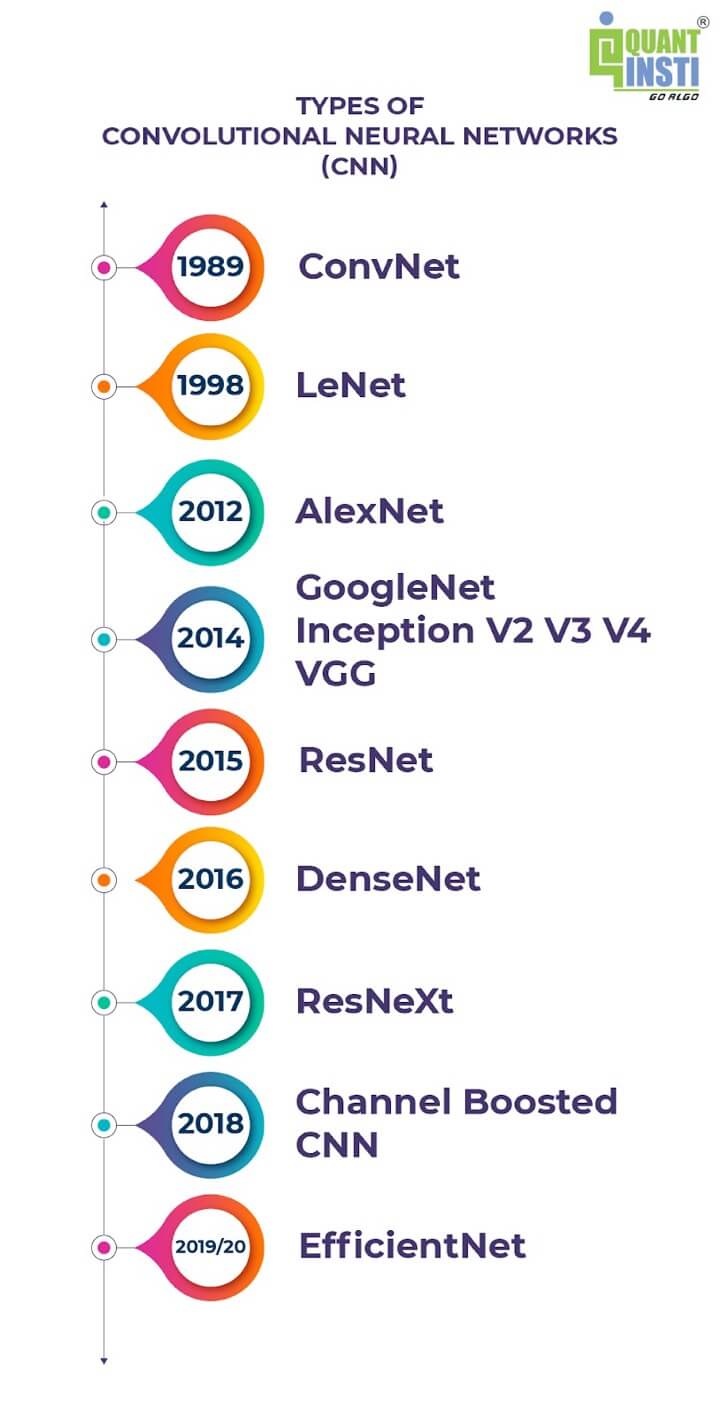

Types of convolutional neural networks (CNN)

Several types of CNN architecture have been developed over time, each with its own purpose. The image above shows when each type was introduced, and the timeline is as follows.

- ConvNet (1989) – ConvNet is simply short for convolutional neural network. ConvNet is a specific type of neural network architecture designed for processing and analysing visual data, such as images and videos. ConvNets are particularly effective in tasks like image classification, object detection, and image segmentation.

- LeNet (1998) – LeNet, usually referring to LeNet-5, is one of the pioneering convolutional neural network (CNN) architectures, developed by Yann LeCun et al. in the 1990s. It was primarily designed for handwritten digit recognition and played a crucial role in advancing the field of deep learning.

- AlexNet (2012) – AlexNet is a CNN architecture that gained prominence after winning the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) in 2012. It introduced several key innovations, such as the use of Rectified Linear Units (ReLU), local response normalisation, and dropout regularisation. AlexNet played a significant role in popularising deep learning and CNNs.

- GoogLeNet or Inception (2014; later versions V2, V3 and V4) – GoogLeNet, also known as Inception, is an influential CNN architecture that introduced the concept of “inception modules.” Inception modules allow the network to capture features at multiple scales by using parallel convolutional layers with different filter sizes. This architecture significantly reduced the number of parameters compared to previous models while maintaining performance.

- VGG (2014) – The VGG network, developed by the Visual Geometry Group (VGG) at the University of Oxford, comes in 16- and 19-layer versions that use small 3×3 filters throughout. It emphasised deeper networks and a uniform architecture across the layers, which led to better performance but increased computational complexity.

- ResNet (2015) – Residual Network (ResNet) is a groundbreaking CNN architecture that addressed the problem of vanishing gradients in very deep networks. ResNet introduced skip connections, also known as residual connections, that allow the network to learn residual mappings instead of directly trying to learn the desired mapping. This design enables the training of extremely deep CNNs with improved performance.

- DenseNet (2016) – DenseNet introduced the idea of densely connected layers: within a dense block, each layer receives the feature maps of all preceding layers as input, in a feed-forward manner. This architecture promotes feature reuse, reduces the number of parameters, and mitigates the vanishing gradient problem.

- ResNeXt (2017) – ResNeXt is an extension of ResNet that introduces the concept of “cardinality” to capture richer feature representations. It uses grouped convolutions and increases the model’s capacity without significantly increasing the computational complexity.

- Channel Boosted CNN (2018) – Channel Boosted CNN aimed to improve the performance of CNNs by explicitly modelling interdependencies between channels. It employed a channel attention mechanism to dynamically recalibrate the importance of each channel in the feature maps.

- EfficientNet (2019/20) – EfficientNet used a compound scaling method to balance model depth, width, and resolution for efficient resource utilisation. It achieved state-of-the-art accuracy on ImageNet while being computationally efficient, making it suitable for mobile and edge devices.
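ResNet and DenseNet from the timeline above differ mainly in how they wire earlier layers into later ones: ResNet adds a block's input to its output, while DenseNet concatenates all earlier outputs. The sketch below is a toy illustration, not either paper's implementation: plain lists stand in for stacks of feature maps, list concatenation stands in for stacking along the channel axis, and `zero_layer` and `width_recorder` are hypothetical stand-ins for real convolutional layers.

```python
def residual_block(x, layer):
    """ResNet-style skip connection: output = F(x) + x (shapes must match)."""
    fx = layer(x)
    return [f + xi for f, xi in zip(fx, x)]


def dense_block(x, layers):
    """DenseNet-style connectivity: each layer sees x plus ALL earlier layer outputs."""
    features = list(x)
    for layer in layers:
        features = features + layer(features)  # concatenate, do not add
    return features


def zero_layer(v):
    # Hypothetical layer whose weights are all zero.
    return [0.0 for _ in v]


def width_recorder(v):
    # Hypothetical layer that emits 2 new "channels"; each value records how
    # many inputs the layer received, which makes the dense wiring visible.
    return [float(len(v))] * 2


x = [1.0, -2.0, 3.0]
print(residual_block(x, zero_layer))  # [1.0, -2.0, 3.0]: the block falls back to the identity
print(dense_block(x, [width_recorder] * 3))
```

The residual block with an all-zero layer simply passes its input through, so a deep ResNet can fall back to identity mappings instead of having to learn them, and because the derivative of F(x) + x with respect to x is F′(x) + 1, gradients have a direct path backwards through every skip connection. In the dense block, the three layers see inputs of width 3, 5 and 7, showing how each layer reuses every earlier feature.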

The blog also discusses the uses and applications of CNNs in trading.

It will help you understand how this type of deep learning system can be used to make informed decisions and to create trading strategies aimed at desirable returns.

Finally, the blog walks through a Python implementation for training a CNN model to make predictions based on the parameters you choose.

In the trading domain, the performance and effectiveness of a CNN depend on the quality of the data, the design of the model architecture, and the size and diversity of the training data.

This blog covers convolutional neural networks (CNNs) with the help of examples, which will help you learn about CNNs and how they work in the trading domain.

Let us dive deeper into convolutional neural networks and find out how CNNs are used in trading.

This blog covers the following in detail:

- Using convolutional neural networks in trading

- Working of convolutional neural networks in trading

- Steps to use convolutional neural networks in trading with Python

Stay tuned for the next installment to learn about using convolutional neural networks in trading.

Originally posted on QuantInsti Blog.

Disclosure: Interactive Brokers

Information posted on IBKR Campus that is provided by third-parties does NOT constitute a recommendation that you should contract for the services of that third party. Third-party participants who contribute to IBKR Campus are independent of Interactive Brokers and Interactive Brokers does not make any representations or warranties concerning the services offered, their past or future performance, or the accuracy of the information provided by the third party. Past performance is no guarantee of future results.

This material is from QuantInsti and is being posted with its permission. The views expressed in this material are solely those of the author and/or QuantInsti and Interactive Brokers is not endorsing or recommending any investment or trading discussed in the material. This material is not and should not be construed as an offer to buy or sell any security. It should not be construed as research or investment advice or a recommendation to buy, sell or hold any security or commodity. This material does not and is not intended to take into account the particular financial conditions, investment objectives or requirements of individual customers. Before acting on this material, you should consider whether it is suitable for your particular circumstances and, as necessary, seek professional advice.
